Dragonfly

Jayesh Pillai | Azif Ismail | Manvi Verma

Dragonfly is a VR Film with stereoscopic 3D and spatial audio.

Synopsis: The film is a tale of waiting. Aisha longs for the return of her lover, who has been through a grievous incident. While she holds onto a glimmer of hope, the wait begins to test her patience. ‘Dragonfly’ seeks to immerse the viewer in an experience that fluctuates between Aisha’s reality, memories and hopes.

Official Website : dragonflymovie.in

Trailers : Trailer (2D), Trailer (3D)

Cast & Crew : Dragonfly Film Details

Design Process

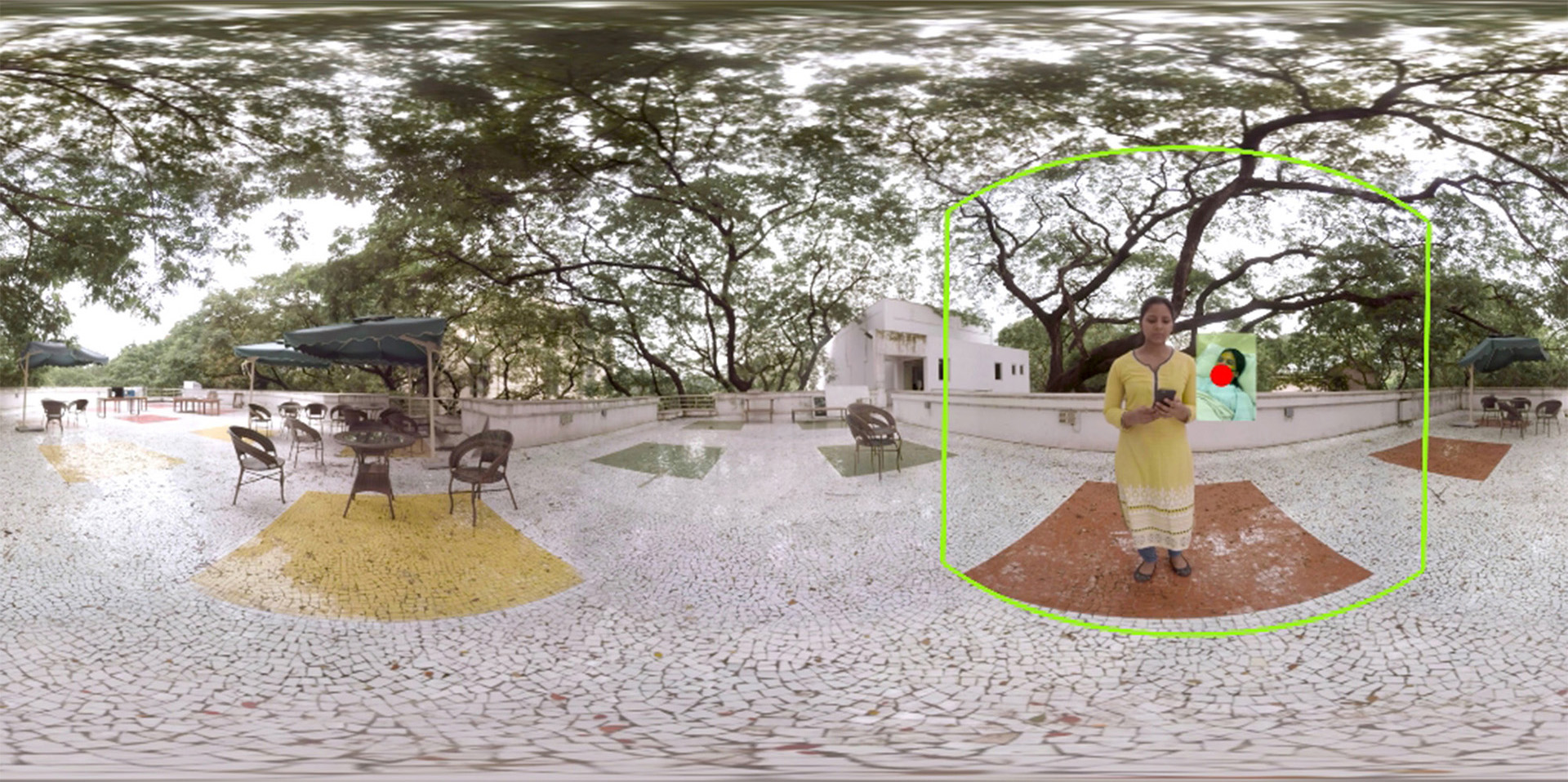

This VR film was created as part of the ongoing research work on “Understanding the Grammar of VR Storytelling”. The film experience was designed keeping in mind the insights from our previous study that looked into the effects of visual cues in 360° narratives.

Here’s a brief description of the process followed for creating this VR film.

1. Pre-Production

1.1. Ideation

Story & Screenplay : The concept was developed with several considerations in mind, such as the medium of storytelling, the potential of the 360° space and the manner in which one would experience the narrative. The experience was conceived to be used for investigating visual and audio cues and how they may help direct one’s attention within the immersive environment of the narrative. The screenplay, however, was initially written in a traditional format.

Storyboarding : A few storyboards were created to understand the spatial nature of the shots within each scene. At this stage, it was decided that all shots would be static, without any camera movement, and that the film would consist of two single-take scenes separated by a transition.

1.2. Narrative Structure

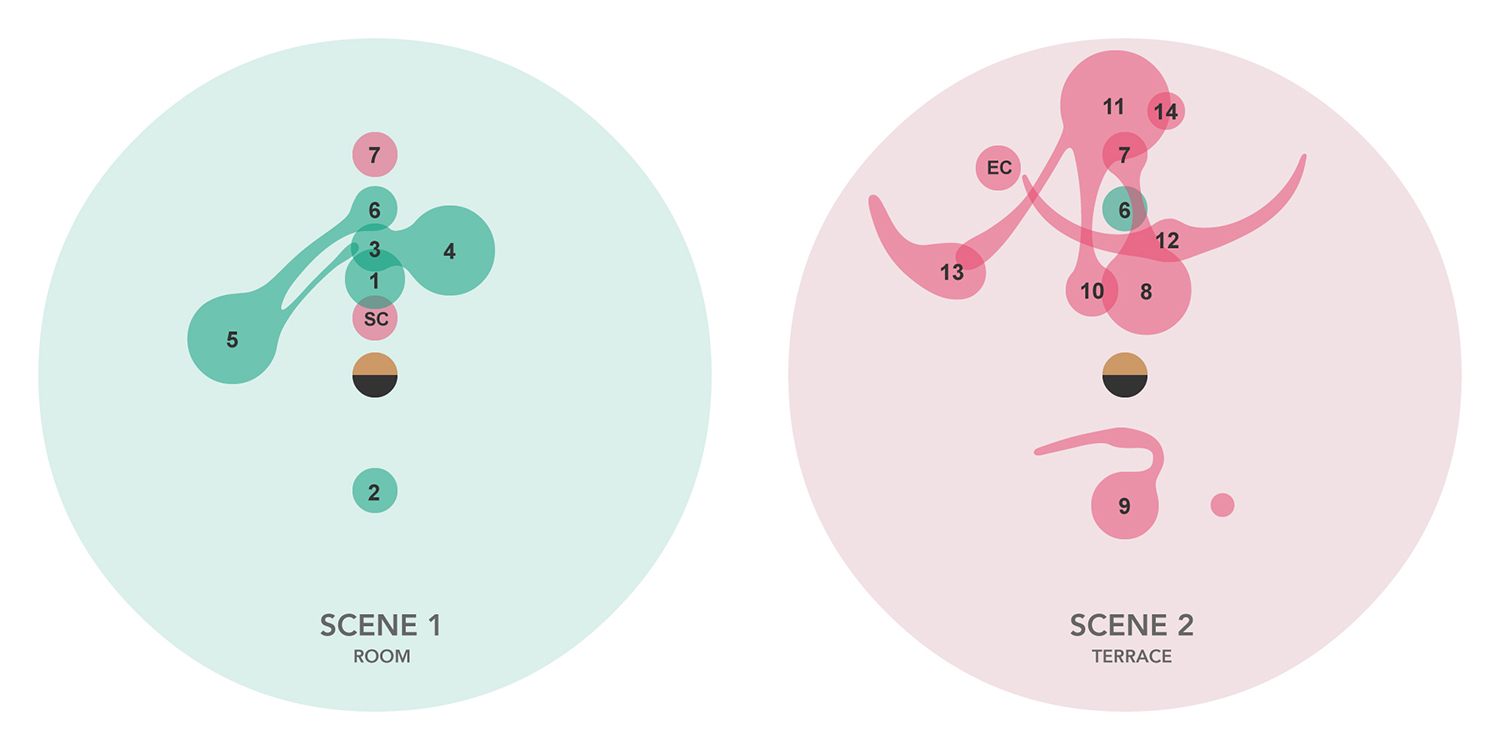

Space - Zones of Action : To better understand the story in a spatial sense, a top view of the scenes depicting the story world was created. This helped in laying out the plot-points across the space (see images below).

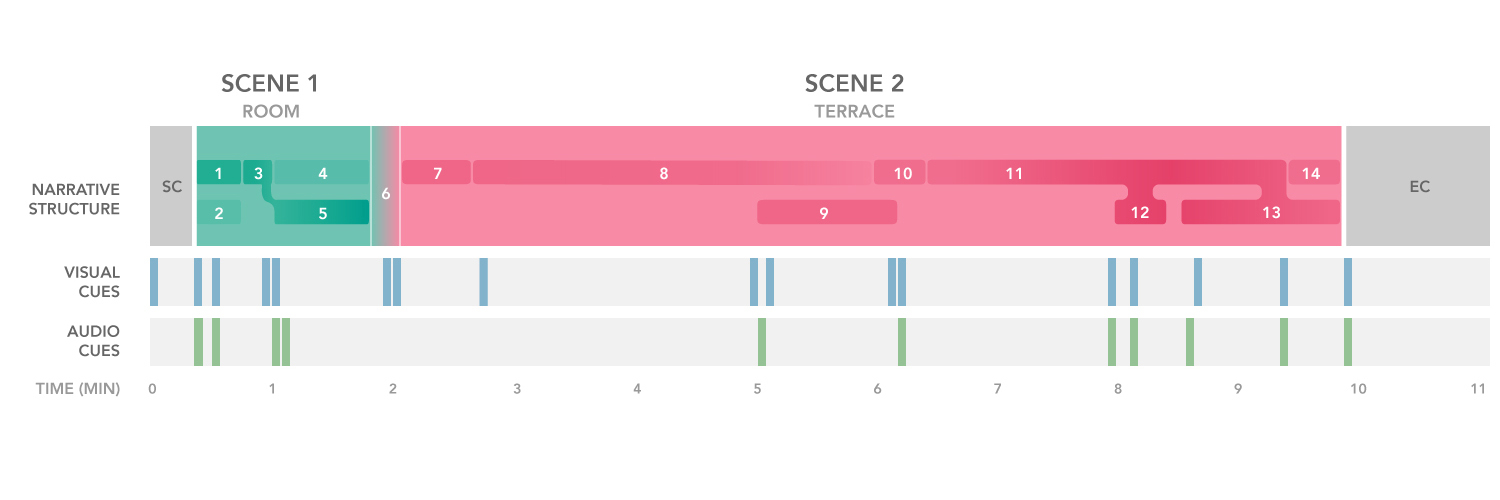

Time - Cuts & Transitions : In order to plan the narrative with parallel plot-points in a temporal manner, the narrative was visualised as a timeline. The two scenes, the starting and ending credits, along with potential visual and audio cues to prompt attention shifts from one plot-point to another can be seen in the image below.

1.3. Equipment Selection

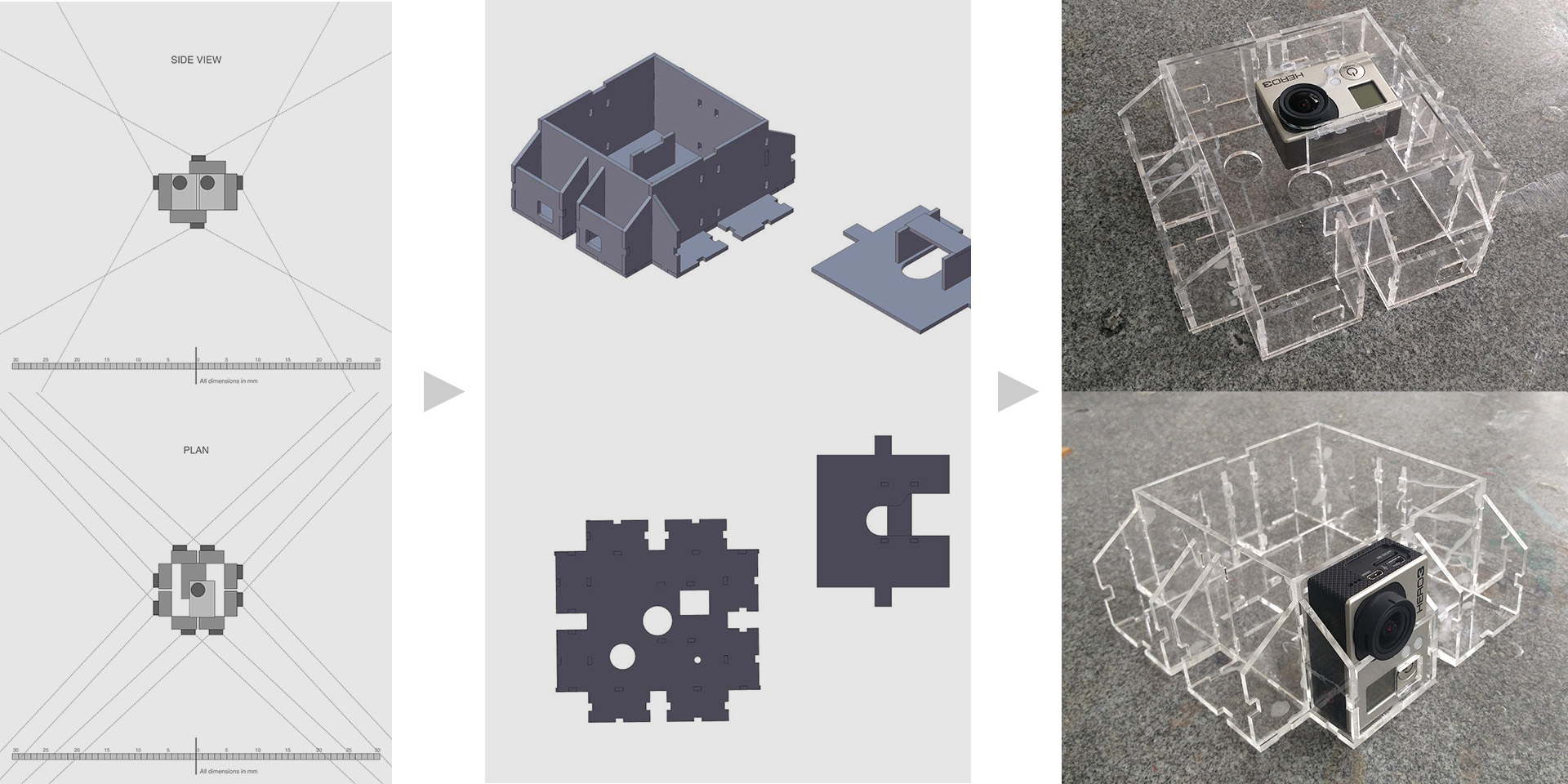

Camera & Audio Equipment : As the VR film was to have stereoscopic 3D, it was decided to use high-resolution GoPro cameras for recording the videos, which would later be stitched into equirectangular images. For this purpose, a camera rig was designed and fabricated at IDC School of Design to hold ten cameras (see images below). To record the dialogues during the performances, a wireless microphone system was chosen, as it allowed the sound to be monitored remotely.

1.4. Planning the Production

Casting & Location Search : Actors were cast according to the characters in the story. The locations chosen not only corresponded to the environments in the story but also allowed the 360° panoramic shots to be planned strategically (see images below).

Set & Lighting Design : The first scene was to be shot in an interior set for which artificial lighting was planned. The second scene was to be shot in an exterior set, relying on natural lighting.

Rehearsals, Tests & Shooting Logistics : The actions were choreographed to be filmed in a single take. To better prepare for the shoot, rehearsals with the actors were conducted on set (see images below).

2. Production

2.1. Camera Setup

Static vs Moving Gear : As mentioned above, the scenes were planned to be static. However, a section of the first scene required an interior view of a moving vehicle, so certain moving shots had to be filmed. These shots were recorded using the same rig mounted on a moving bike and were later composited to make the vehicle appear to be in motion.

2.2. Monitoring the Performance

No “Behind the camera” : As the video records the entire 360° space, the idea of directing from behind the camera no longer applies in the usual way.

Live Feed : Ideally, the director and crew members would have to stay outside the visual field of the camera and monitor the performances remotely through a live video feed. However, the narrative structure of this film allowed us to monitor the performances in person, without the need for a live feed.

2.3. Reference Images

Removing Equipment & Crew : Several reference images were taken during the shoot to help remove visible equipment and crew from the footage in the post-production phase.

2.4. Sound Recording

On-Set & Studio : For the recording of primary dialogues, we relied on sync sound. Additional dialogues and sounds were later recorded in the studio and combined in post-production.

3. Post-Production

3.1. Video Stitching

Automatic vs Manual Stitching : 360° cameras often provide options for automatically stitched video output. For multi-camera rigs such as ours, several software tools are available to stitch the videos together. We stitched the footage manually in After Effects.

3.2. Editing

Equirectangular Videos : Videos were recorded simultaneously from a total of 10 cameras. While eight cameras captured the horizontal portion of the 360° space, one camera each captured the top and bottom of the space. Images from a set of six cameras facing in six different directions were stitched together to create an equirectangular image.
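Stitching can be pictured as two steps. First, every pixel of the equirectangular output is assigned a viewing direction. Second, that direction is matched to the camera that best covers it.

The sketch below shows this under one illustrative assumption: for each eye, four horizontal cameras sit 90° apart in yaw, alongside the top and bottom cameras, and the six are treated as the faces of a cube. The camera labels and function names are hypothetical.

A real stitch, including the manual After Effects workflow described above, also blends the overlapping regions and corrects for parallax. This nearest-camera assignment ignores both.

```python
import math

# Hypothetical per-eye layout (an assumption, not the documented rig geometry):
# four horizontal cameras 90 degrees apart plus one top and one bottom camera.
# Axes: x = right, y = up, z = forward.
CAMERA_AXES = {
    "front": (0.0, 0.0, 1.0),
    "right": (1.0, 0.0, 0.0),
    "back": (0.0, 0.0, -1.0),
    "left": (-1.0, 0.0, 0.0),
    "top": (0.0, 1.0, 0.0),
    "bottom": (0.0, -1.0, 0.0),
}


def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to continuous equirectangular coordinates.

    Yaw spans [-180, 180) from the left edge to the right edge;
    pitch spans [90, -90] from the top edge to the bottom edge.
    """
    u = (yaw_deg + 180.0) / 360.0 * width
    v = (90.0 - pitch_deg) / 180.0 * height
    return u, v


def pixel_to_direction(u, v, width, height):
    """Inverse of direction_to_pixel: coordinates back to yaw/pitch in degrees."""
    yaw_deg = u / width * 360.0 - 180.0
    pitch_deg = 90.0 - v / height * 180.0
    return yaw_deg, pitch_deg


def to_unit_vector(yaw_deg, pitch_deg):
    """Convert yaw/pitch in degrees to a unit vector (x right, y up, z forward)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def nearest_camera(yaw_deg, pitch_deg):
    """Pick the camera whose optical axis is closest to the direction (largest dot product)."""
    d = to_unit_vector(yaw_deg, pitch_deg)
    return max(
        CAMERA_AXES,
        key=lambda name: sum(a * b for a, b in zip(d, CAMERA_AXES[name])),
    )


def camera_map(width, height):
    """For every output pixel centre, record which camera would supply it."""
    return [
        [nearest_camera(*pixel_to_direction(u + 0.5, v + 0.5, width, height))
         for u in range(width)]
        for v in range(height)
    ]
```

For example, `camera_map(8, 4)` gives a coarse 8×4 map. Its top row comes entirely from the top camera and its bottom row from the bottom camera. The middle rows sweep back, left, front, right and back again across the width of the frame.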

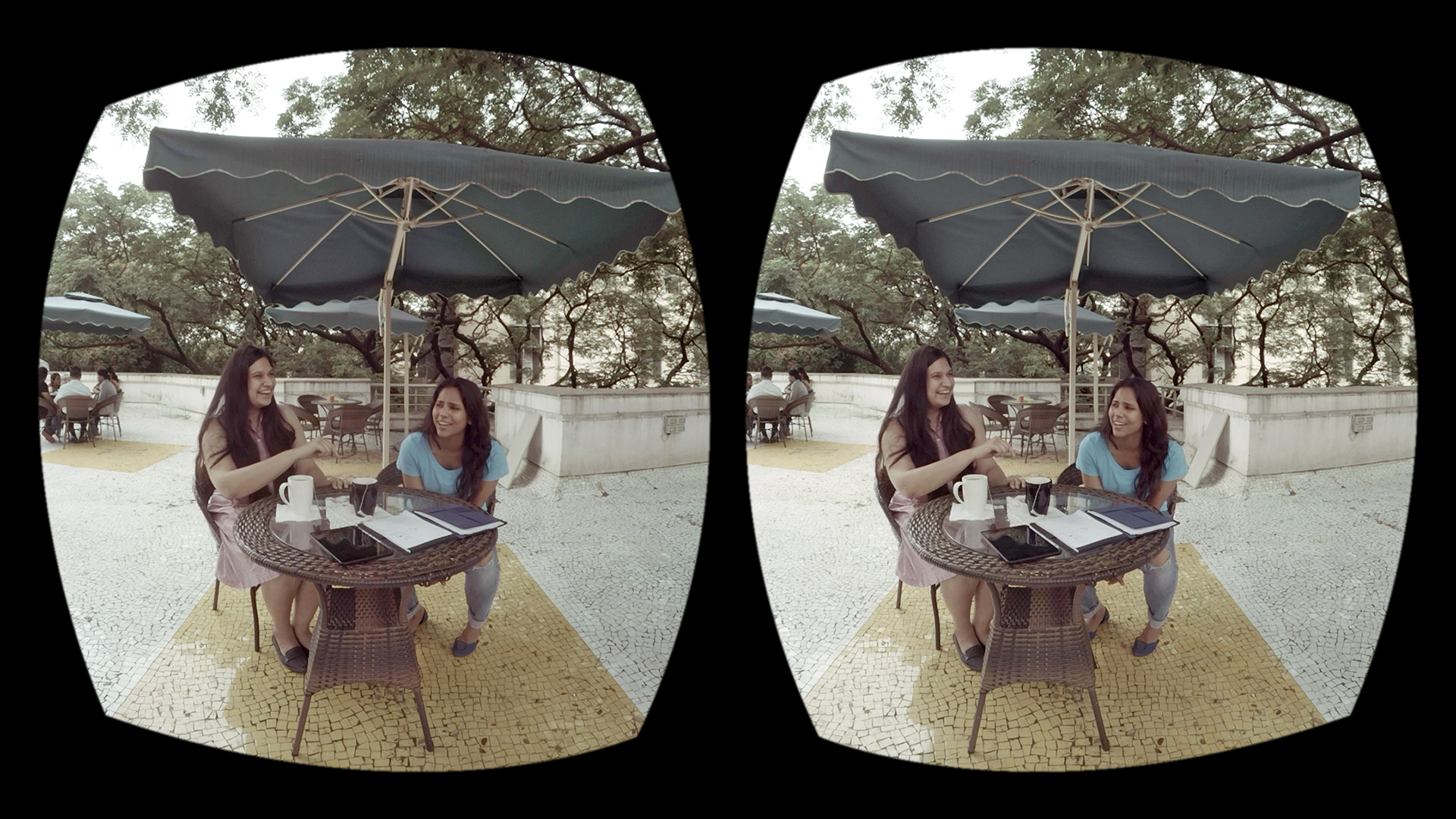

Stereoscopic 3D : For each equirectangular image for the left eye, there is a corresponding equirectangular image for the right eye. These stereoscopic videos create an illusion of depth and provide a sense of three-dimensionality to the panoramic space when experiencing the film using a VR headset.
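Two small calculations make the stereoscopic idea concrete:

- **Disparity.** For an object straight ahead, the first function estimates the angular disparity between the left-eye and right-eye viewpoints and converts it to equirectangular pixels. The 6.4 cm baseline is an assumed typical interpupillary distance, not a measurement of this rig.
- **Packing.** The second function stacks a left/right pair into a single top-bottom frame. This is one common way of delivering stereoscopic 360° video; whether Dragonfly used this exact layout is an assumption.

Frames are plain nested lists, and all function names are hypothetical.

```python
import math


def disparity_pixels(distance_m, baseline_m=0.064, width=4096):
    """Approximate horizontal disparity, in equirectangular pixels, for a point
    straight ahead at distance_m, seen from two viewpoints baseline_m apart.

    baseline_m defaults to an assumed average interpupillary distance.
    """
    angle_rad = 2.0 * math.atan((baseline_m / 2.0) / distance_m)
    return math.degrees(angle_rad) / 360.0 * width


def pack_top_bottom(left, right):
    """Stack a left-eye frame above a right-eye frame of the same size."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right frames must have identical dimensions")
    return [list(row) for row in left] + [list(row) for row in right]


def unpack_top_bottom(frame):
    """Split a top-bottom frame back into its (left, right) halves."""
    if len(frame) % 2:
        raise ValueError("a top-bottom frame needs an even number of rows")
    half = len(frame) // 2
    return frame[:half], frame[half:]
```

With these assumptions, `disparity_pixels(2.0)` comes to roughly 21 pixels on a 4096-pixel-wide frame, while `disparity_pixels(20.0)` is about 2 pixels. Nearby objects differ more between the two eyes, and it is this difference that the headset turns into a sense of depth.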

Spatial Audio : The sounds were edited using FB360 Spatial Workstation to enable interactive head-tracked spatial sound. The entire audio content was then converted to a first-order ambisonic format to accompany the 360° video.
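First-order ambisonics represents the whole sound field with four channels, and this is what makes head-tracked playback possible. The player rotates the field against the listener's head movement instead of re-mixing individual sources.

The sketch below does two things:

- It encodes a mono sample at a given direction using the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation), which is the first-order format YouTube expects for spatial audio.
- It rotates the resulting field for a head turn.

It illustrates the underlying maths only. It is not how FB360 Spatial Workstation works internally, and the function names are hypothetical.

```python
import math


def encode_foa_ambix(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample as first-order AmbiX channels (W, Y, Z, X).

    Azimuth is measured anticlockwise from straight ahead (positive = left),
    elevation upwards from the horizon; SN3D normalisation keeps W = sample.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    x = sample * math.cos(az) * math.cos(el)
    return (w, y, z, x)


def mix(*fields):
    """Sum several encoded sources channel by channel into one sound field."""
    return tuple(sum(channel) for channel in zip(*fields))


def rotate_for_head_yaw(field, head_yaw_deg):
    """Counter-rotate the field when the listener turns their head left by
    head_yaw_deg, so sources stay fixed in the scene rather than in the head.
    W (omnidirectional) and Z (vertical) are unaffected by a pure yaw turn.
    """
    w, y, z, x = field
    t = math.radians(head_yaw_deg)
    x_rot = x * math.cos(t) + y * math.sin(t)
    y_rot = y * math.cos(t) - x * math.sin(t)
    return (w, y_rot, z, x_rot)
```

For example, take a voice encoded at 90° azimuth, to the listener's left. Once the listener turns 90° to the left, its energy moves into the X (front) channel, so the voice is now heard straight ahead.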

3.3. VFX & Additional Graphics

Visual effects were added wherever the narrative required them. CGI elements were carefully composited into the equirectangular (left & right) pairs. Additionally, starting and ending credits were designed.

3.4. Visual Identity Design

To create an identity for the film and its related materials, a set of visual elements was designed. This identity was then incorporated into all promotional materials, such as the Dragonfly website, trailers and music video.

4. Publishing

4.1. Channels of Diffusion

The VR film was planned to be made available on the open YouTube 360 platform because of its support for stereoscopic 3D and spatial audio.

4.2. Mode of Experience

Participants were invited both for the research experiments and for general screenings. The film was presented on high-end VR headsets such as the HTC Vive and Oculus Quest, and the experience on mobile VR headsets was also tested.

The VR Film - Dragonfly

For the best experience, use a VR headset and headphones.

Learnings

We conducted experiments to understand how visual and audio cues work in VR narratives. Our learnings, insights and propositions have been presented at VRCAI 2019 and CVMP 2019. Additionally, some of these insights were presented in an invited talk titled “Towards Guidelines for VR storytelling” at SIGGRAPH Asia 2019 (Narrative Visualisation - Birds of a Feather).

Legend: Eye-tracking data ●, Point of View (POV) □, Intended Experience ●

Publications

- Pillai J.S. and Verma M. (2019). “Grammar of VR Storytelling: Analysis of Perceptual Cues in VR Cinema”, in: 16th ACM SIGGRAPH European Conference on Visual Media Production (CVMP 2019), London, UK.

- Pillai J.S. and Verma M. (2019). “Grammar of VR Storytelling: Narrative Immersion and Experiential Fidelity in VR Cinema”, in: 17th ACM SIGGRAPH International Conference on Virtual Reality Continuum and Its Applications in Industry (VRCAI 2019), Brisbane, Australia.

Epilogue: Music Video

Dragonfly [Unplugged]

Here’s a music video that was created for the promotion of the VR film.